IPPSR’s State of the State Survey (SOSS) suggested an easy path to victory for Hillary Clinton in Michigan’s 2016 general election, but Donald Trump won the state by a narrow margin on his way to an Electoral College victory. Our survey was hardly alone in showing a Clinton lead (only one Michigan public survey in all of 2016 showed a Trump lead), but SOSS was the furthest off from the final result. In the election’s aftermath, observers are investigating why state polls in the upper Midwest differed from the election outcome. Although national polls were largely accurate, state polls in consequential states were substantially off. IPPSR hopes to learn from the experience and is pleased to be participating in a committee review of state polls by the American Association for Public Opinion Research.

SOSS is a regular, multi-topic quarterly survey of Michigan adults conducted by landline and cell phone over a long field period (for these analyses, we use interviews from September 1st to Election Day). To maintain comparable longitudinal data, we have used the same weighting process since the 1990s. One important reason our survey (and others) may have erred this year is that this longstanding process does not weight by education level. To assess the impact, we re-weighted the survey by adding a weight for a five-category education variable (from below high school to graduate degree).
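As a rough illustration of what adding an education weight involves, the sketch below multiplies each respondent's existing weight by a post-stratification factor so that the weighted education distribution matches a set of target shares. This is a minimal sketch, not the SOSS weighting procedure: the function name, the category labels, the toy respondents, and the target shares are all hypothetical values chosen only to make the arithmetic visible.

```python
from collections import defaultdict


def add_education_weight(respondents, targets, base_key="weight", edu_key="education"):
    """Scale each base weight by (target share / current weighted share)
    of the respondent's education category."""
    total = sum(r[base_key] for r in respondents)
    cell_totals = defaultdict(float)
    for r in respondents:
        cell_totals[r[edu_key]] += r[base_key]
    # One adjustment factor per education category present in the sample.
    factors = {
        cell: targets[cell] / (cell_total / total)
        for cell, cell_total in cell_totals.items()
    }
    return [r[base_key] * factors[r[edu_key]] for r in respondents]


# Hypothetical toy sample: (education category, number of respondents).
# Every respondent starts with a base weight of 1.0.
counts = [("below_hs", 1), ("hs", 2), ("some_college", 3), ("college", 2), ("graduate", 2)]
sample = [{"education": edu, "weight": 1.0} for edu, n in counts for _ in range(n)]

# Hypothetical target shares (NOT Michigan figures); they must sum to 1.
targets = {"below_hs": 0.1, "hs": 0.3, "some_college": 0.3, "college": 0.2, "graduate": 0.1}

new_weights = add_education_weight(sample, targets)
```

In this toy sample, high-school respondents are under-represented relative to the target and are weighted up, while graduate-degree respondents are over-represented and are weighted down. The total weight is unchanged, so any shift in a candidate's margin comes only from rebalancing the education groups.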

Our results (among 796 likely voters) showed a narrower margin when adding the weight for education, especially when four candidates were listed (including Libertarian Gary Johnson and Green Party candidate Jill Stein). In an effort to learn respondents’ preferences between the two main candidates, we first asked everyone to select between Clinton and Trump in a one-on-one race (including a follow-up question asking respondents who they would select if forced to choose) before asking everyone to select among the four candidates. But results using both weights for both questions still show Clinton significantly ahead, suggesting that education weighting was not the full explanation.

Another important difficulty is that the race changed significantly during the field period. Both candidates spent considerably more time and advertising dollars in Michigan during the final week of the campaign, suggesting that they saw the race closing dramatically as Election Day approached (for instance, neither candidate spent money on local television ads except in the last week, when they spent $5 million). Some have also attributed late campaign movement nationally to events in the final days of the campaign, especially letters from FBI Director James Comey. Unfortunately, our survey did not interview a consistent number of respondents each week and interviewed fewer respondents (and likely voters) in the final two weeks. The chart below shows Clinton’s margin over Trump among likely voters each week based on different weightings.

Our results show a highly variable race from week to week (based in part on small sample sizes, as should be expected). The unweighted data for the one-on-one race shows Clinton ahead until the final week. Interestingly, this unweighted version makes the race appear more stable and narrow. The weighting without education shows a more significant Clinton lead. The final weighted data shows more volatility. It is thus possible that non-response biases correctly reflected the likely electorate, with weighting doing more harm than good. There is an interesting division based on weights in the period following the release of the Access Hollywood tape of Trump speaking about women (on 10/7) and the second presidential debate (on 10/9). During this period, lower-education respondents were much more likely to support Trump than higher-education respondents. Given the small samples, the week-to-week variation likely includes sampling error, opinion change, and differential response from each candidate’s supporters (with people agreeing to participate in the survey based on their candidate’s perceived performance).
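To make the small-sample caveat concrete, the following sketch groups interviews by week of the field period and reports a weighted Clinton-minus-Trump margin alongside the raw count and Kish's effective sample size, (Σw)² / Σw². It is a sketch under stated assumptions: the field names, the `weekly_margins` helper, and the six toy interviews are hypothetical and are not drawn from the SOSS data.

```python
from collections import defaultdict
from datetime import date


def weekly_margins(interviews, start):
    """Return {week_index: (margin_in_points, n, effective_n)}.

    Week 0 covers the first seven days starting at `start`.
    """
    buckets = defaultdict(list)
    for iv in interviews:
        buckets[(iv["date"] - start).days // 7].append(iv)

    results = {}
    for week, rows in sorted(buckets.items()):
        w_total = sum(r["weight"] for r in rows)
        clinton = sum(r["weight"] for r in rows if r["choice"] == "Clinton")
        trump = sum(r["weight"] for r in rows if r["choice"] == "Trump")
        margin = 100.0 * (clinton - trump) / w_total
        # Kish effective sample size: unequal weights shrink the usable n.
        n_eff = w_total ** 2 / sum(r["weight"] ** 2 for r in rows)
        results[week] = (margin, len(rows), n_eff)
    return results


# Hypothetical toy interviews, for illustration only.
start = date(2016, 9, 1)
interviews = [
    {"date": date(2016, 9, 2), "choice": "Clinton", "weight": 1.0},
    {"date": date(2016, 9, 5), "choice": "Trump", "weight": 1.0},
    {"date": date(2016, 9, 6), "choice": "Clinton", "weight": 2.0},
    {"date": date(2016, 9, 9), "choice": "Trump", "weight": 1.0},
    {"date": date(2016, 9, 12), "choice": "Trump", "weight": 1.0},
    {"date": date(2016, 9, 13), "choice": "Clinton", "weight": 1.0},
]

margins = weekly_margins(interviews, start)
```

Reporting the effective sample size next to each weekly margin helps show how much of the week-to-week movement could be noise: weeks with few interviews or very uneven weights carry much wider uncertainty than the full-sample estimate.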

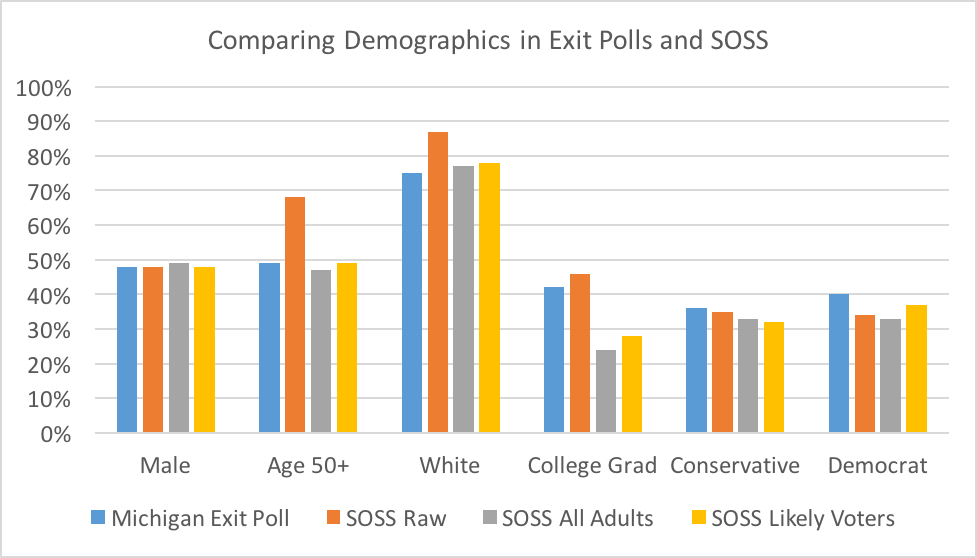

Another possible reason the polls failed to match Michigan’s outcome is that they did not anticipate the electorate. Our likely voter indicator simply asked people how likely they were to vote on a scale of one to ten, but we did not observe major differences in results based on how likely people said they were to vote (and the most likely voters were actually more supportive of Clinton). To help assess the influence of the electorate, we compared the demographics and political identifications in our survey to those in the media exit polls. The graph below compares our raw data (all respondents, unweighted), our weighted data regardless of likelihood to vote (all adults), and our weighted data from likely voters.

There were no significant differences in gender, ideology, or partisanship between our survey and exit polls. The weighting effectively matched the age and race of the electorate (regardless of likelihood to vote). Interestingly, the raw data most closely matched the high level of education in the electorate (as measured by exit polls). Yet exit polls are hardly definitive and have historically overestimated the number of participating college graduates and minority voters. Overall, there is not much evidence that the electorate differed substantially from what our survey anticipated.

SOSS calls both landline and cellular telephones and recalls a subset of respondents from a previous survey the year before, providing a small panel component. We assessed whether our strong results for Clinton could partially be the result of recalling prior survey respondents. Below, we report the results based on the sample type. We find a significant divide between cellular respondents re-contacted from our prior survey and new cellular respondents, with new cellular respondents much less favorable to Clinton. Yet, among landline respondents, new respondents were actually more favorable to Clinton. New cellular respondents were more likely to be reached later in the campaign, so this may be an effect of interview date rather than sample type (there is too much day-to-day variation in the state of the race to be sure).

Another theory of how the polls underestimated Trump support is that some voters declined to voice their support for Trump. Our survey showed substantially more third-party support and undecided voters than usual, with third-party voters mostly failing to materialize on Election Day (though some people did cast a ballot without voting for president). We have no way to directly test for the presence of shy Trump voters: respondents who may have supported Trump but did not want to tell pollsters because they thought it was socially unacceptable.

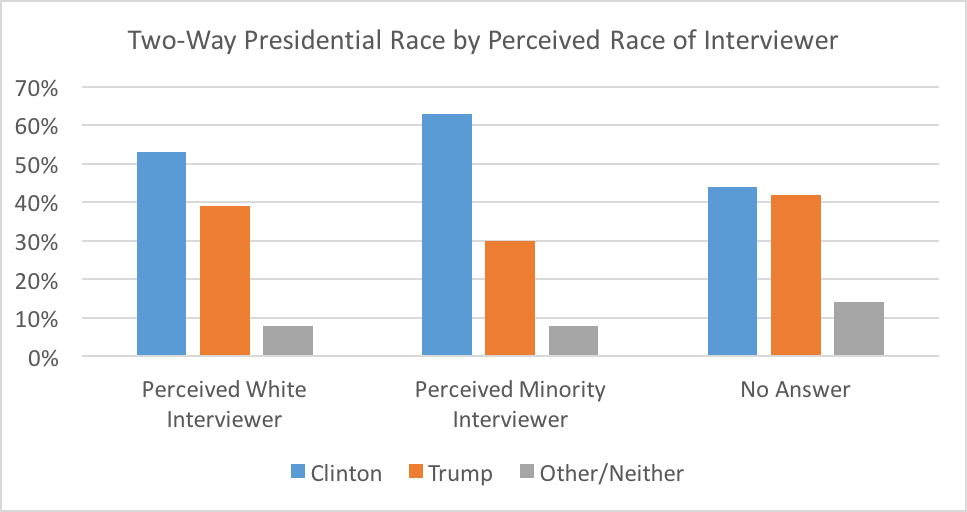

Yet we did collect some data that might help assess social desirability bias: we recorded interviewers’ race and gender; interviewers also asked respondents to assess the interviewer’s racial background. More than half (58%) of our likely voter interviews were conducted by racial minority interviewers and 63 percent were conducted by women. As revealed below, we observed no major differences in likely voters’ candidate preference based on the race or gender of their interviewer (as reported by the interviewers themselves). The same was true of preferences in the four-candidate contest (not pictured).

Yet there was a relationship between respondents’ perceptions of interviewer race and respondents’ vote choice (reported below for likely voters of all races). Respondents who thought they were speaking with a white interviewer voiced narrower support for Hillary Clinton than those who thought they were speaking with a racial minority interviewer. Those who either said they did not know the race of their interviewer or refused to answer (a full 45 percent of respondents) were roughly even in their support of Clinton and Trump.

The differences in candidate support based on perceived race of interviewer were particularly strong among white voters, with whites who thought they were speaking to a minority interviewer favoring Clinton by a 12-point margin, but whites perceiving a white interviewer favoring Trump by a 7-point margin. Respondents who thought they were speaking to a racial minority interviewer also perceived greater levels of discrimination (in our racial resentment index) and were less ethnocentric (reporting less negative attitudes toward racial minorities), while those who thought they were speaking with a white interviewer reported more racial resentment and ethnocentrism.

White respondents who thought they were speaking to a minority interviewer may have reported more socially desirable views on the presidential race, but the combined results suggest a different explanation. Although most respondents who offered an opinion on the likely race of their interviewer judged correctly, there was a pattern in those giving no answer or saying they did not know. When speaking to a minority interviewer, Trump voters were less likely to report their perception of their interviewer’s race than non-Trump voters. When speaking to a white interviewer, there was no clear pattern. Trump voters may thus have been reluctant to acknowledge when they were speaking to a minority interviewer, even if they correctly reported their presidential preference.

The evidence regarding our polling miss in Michigan is hardly definitive, but there is some suggestive data to support several common theories. First, weighting by education does suggest a closer race (and perhaps a distinct reaction to the Access Hollywood tape among lower-education voters). Second, the race was highly volatile, with Clinton performing better before the final week but Trump likely gaining at the end after the Comey letter and more extensive Michigan campaigning. Third, there is suggestive evidence of social desirability bias, especially in responses to minority interviewers.

Our survey was an outlier among pre-election polls in Michigan (giving Clinton a greater lead). Sampling error or unknown characteristics of our survey methodology could be responsible. We are sharing the information we do have in the hopes of helping with the broader attempt to understand sources of survey error in 2016. Any definitive accounting will involve looking at many other sources of evidence beyond our survey. We eagerly await others’ analyses of 2016 polling errors and of changes in the final weeks of Michigan’s campaign.